Meta wants to upload the real world

Also: For Netflix, live is live

Hi there! My name is Janko Roettgers, and this is Lowpass. This week: Meta is getting serious about Gaussian splatting, and Netflix’s streaming data reveals how people watch live events.

Meta is getting ready to launch photorealistic Horizon Worlds in beta

Meta’s plans for the metaverse are about to take an interesting new direction: The company is working on ways for people to upload digital clones of their real-world environments to the cloud, and then stream those environments to VR headsets.

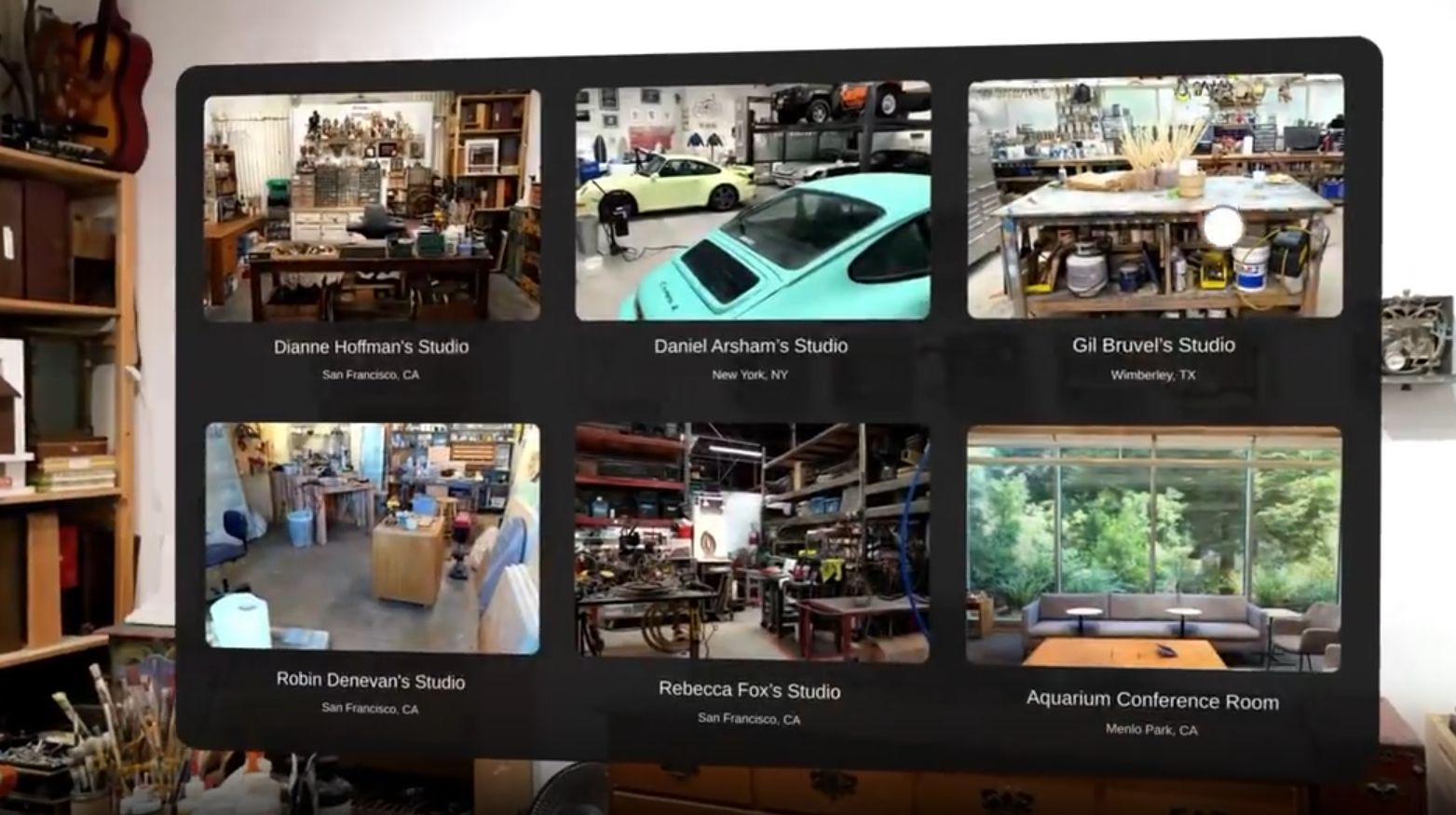

Meta first began exploring the use of 3D-captured spaces in VR last year, when it unveiled a demo app called Hyperscape at its Meta Connect developer conference. The app lets VR users explore six distinct spaces: five artist studios, and Mark Zuckerberg’s former office.

Ahead of this year’s Connect, there are signs that Meta is significantly expanding this work: Recent findings first reported by UploadVR suggest that the company is getting ready to unveil something called “Meta Horizon Photoreal” in beta. Horizon Photoreal appears poised to be directly integrated into a new version of Horizon Worlds, the company’s social metaverse service.

What’s more, Meta’s Horizon mobile app reportedly already includes strings that suggest Meta is letting its employees scan their own surroundings and upload them to the cloud, to use as digital replicas for Horizon Photoreal.

When contacted for this story, a Meta spokesperson didn’t comment directly on Horizon Photoreal, but said that the company had been “making good progress on creating experiences and spaces that transform everyday media into something possible only in VR.” This also included recent efforts to make regular Instagram content available in 3D when viewed with a VR headset, according to that spokesperson.

Streaming the world, one splat at a time

Meta’s Hyperscape demo is based on Gaussian splatting, a relatively new way of capturing objects and spaces that can then be explored in 3D. I wrote a longer story about Gaussian splatting for The Verge earlier this year; in essence, it allows people to move their phone around an object to capture it from all angles.

The viewpoints captured that way then get transformed into a bunch of 3D data that ultimately allows viewers to lean in, and even walk around an object, to explore it freely. There are already a number of apps that let people capture objects and scenes as Gaussian splats, including Scaniverse from Niantic Spatial.

Now, it appears that Meta is working on adding scanning directly to its Horizon mobile app. Strings found by prolific reverse engineer Luna Yiann suggest that Meta employees already have access to a “Hyperscape capture” menu item within the app. They’re also being told that they can only capture a limited number of spaces in 3D, and how their captured data may be used to improve the product.

All of this is very much in line with what I was told by Meta employees last year. The company’s former Horizon OS and Quest VP Mark Rabkin told me at Connect that Hyperscape splats were already running on an engine “that’s pretty much the Horizon engine.” Asked whether Horizon users will eventually be able to scan their own living rooms, and then upload digital replicas of those spaces to Horizon, Rabkin said: “That’s what we’re working toward.”

Is it ready for the masses – or Hollywood?

The big question is: Who will be able to capture spaces once Horizon Photoreal launches, and what kind of limits will be in place for both uploading and accessing those spaces?

In its current iteration, Hyperscape offers high-definition captures of entire rooms, which users can freely move around in. Meta has kept mum on how exactly these spaces have been captured and how much editing was involved in getting those captures ready for the world.

When I interviewed Niantic Spatial CTO Brian McClendon for my Verge story last year, he mused that the demo likely involved substantial editing. “That doesn’t scale, but it looks really good,” McClendon said at the time. He added that he didn’t know whether Meta had a path towards scaling Hyperscape in the future.

We’ll likely have to wait until Meta Connect in September to find out how the company plans to bring a photorealistic metaverse to the masses. I don’t have any inside knowledge, but I wouldn’t be surprised if Meta used generative AI to deal with some of the editing issues, and perhaps limited publishing to a smaller circle of users at launch.

One thing is already clear: Adding Gaussian splats to the metaverse opens up a whole range of possibilities. End users could scan their living rooms, and use those digital clones to host friends in the metaverse. Entertainment companies could use the technology to scan film sets, bands could scan practice rooms and studios, museums could easily let people from around the world explore their exhibits, and much more.

With all those possibilities looming on the horizon (pardon the pun), some aren’t waiting for Meta to bring splatting to the metaverse. Ukrainian VR developer Mykhailo Moroz recently figured out a way to import Gaussian splats into VRChat, where users can now explore some of his splats as 3D worlds.

Moroz also just happens to be the lead scientist of the Ukrainian VFX startup Zibra AI, which builds real-time volumetric effects for virtual production. His VRChat hack appears to be a private passion project not directly related to his work. However, one doesn’t have to squint very hard to imagine a future in which VFX artists capture 3D assets and spaces with their phones, preview them with their coworkers in the metaverse, and then import them into professional production environments.

Image courtesy of Netflix

Most of Netflix’s live content is being viewed, well, live

Ever since Netflix started to stream live events, I’ve been wondering: How does the consumption of this kind of content change when it is released on a service largely known for on-demand viewing? How much of a long-tail shelf life does live content have on Netflix, where recordings of live events often remain available for months, and potentially even years?

Or to put it differently: How much of this stuff is actually being watched live?

Turns out most of it.

That’s the result of some number crunching I did this week based on Netflix’s most recent “What We Watched” data dump, which the company released in tandem with its Q2 earnings report last week. In that report, Netflix details how many views and viewing hours most of its movies and shows have amassed over the first six months of this year. For live events, this data includes both live and on-demand viewing.

Live programming is still few and far between on Netflix, so I decided to take a look at something with a fairly regular cadence: WWE Monday Night Raw, which has been airing live on Netflix every week since January.

Netflix doesn’t release live viewership data for each episode. However, thanks to the meticulous work of the fine folks at Wrestlenomics, we do know how many people tune in every week. Wrestlenomics doesn’t have access to real-time data, but the site regularly scours Netflix’s weekly charts, resulting in data it calls “similar to a worldwide DVR+7 household measurement.” I guess we could call it live-ish.

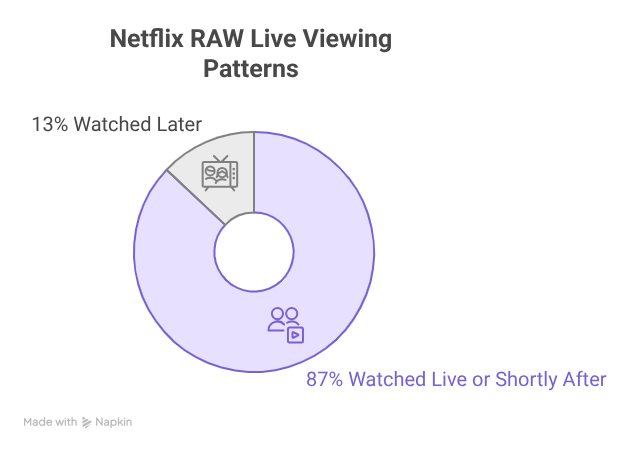

Comparing that data to the numbers released as part of Netflix’s data dump, I was able to determine that on average, 87% of Netflix’s Raw streams are being watched live or during the first few days immediately following their broadcast.

As one might expect, there are some changes over time in Netflix’s data. The longer an episode remains on the service, the higher the chances that someone tunes in months later. But even for the very first episode, which aired on January 6, live-ish viewing accounts for 85% of all views.

That episode amassed some 20 million viewing hours during the first six months of the year, with 17.7 million of those hours coming from live+7 viewing. By comparison, the total number of hours Raw fans have spent on long-tail catch-up streams outside of that seven-day window, across all episodes, is just north of 21 million. This means that a single Raw episode accounts for nearly as much live-ish viewing as the catch-up viewing of more than two dozen episodes combined.
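
For anyone who wants to check the math, here is a minimal Python sketch that uses only the rounded figures quoted above. The variable names are purely illustrative. Because the inputs are rounded, and because the 85% figure above counts views rather than hours, the first result lands near that number rather than exactly on it.

```python
# Rounded figures quoted in the text, in millions of viewing hours.
# These are approximations ("some 20 million", "just north of 21 million"),
# so the results below are approximate as well.
premiere_total_hours = 20.0    # January 6 episode, first six months of the year
premiere_live7_hours = 17.7    # live+7 viewing of that same episode
catch_up_all_episodes = 21.0   # catch-up viewing outside live+7, all episodes combined


def share(part, whole):
    """Return part / whole expressed as a percentage."""
    return 100.0 * part / whole


# How much of the premiere's viewing time happened live or within seven days?
live_ish_share = share(premiere_live7_hours, premiere_total_hours)

# How does one episode's live+7 viewing compare to all catch-up viewing?
premiere_vs_catch_up = share(premiere_live7_hours, catch_up_all_episodes)

print(f"Live-ish share of premiere hours: {live_ish_share:.1f}%")
print(f"Premiere live+7 hours vs. all catch-up hours: {premiere_vs_catch_up:.1f}%")
```

The second number is the one behind the "nearly as much" comparison: a single episode's live-ish hours come out at roughly five sixths of the combined catch-up hours.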

To paraphrase a great 80s hit: Even on Netflix, live is live.

What else

Amazon is acquiring AI gadget maker Bee. The Bee bracelet records everything you say. What could possibly go wrong?

Peacock is getting expensive. The NBCUniversal-owned streaming service is hiking the price of both its ad-supported and its ad-free plans by $3 each.

Netflix revenue surpassed $11 billion in Q2. Looks like ad-supported streaming is working for Netflix.

YouTube brings in close to $10 billion in ad revenue in Q2. It’s definitely working for YouTube.

Vizio is becoming a Walmart house brand. The former smart TV market disruptor is essentially going to be Walmart’s Insignia.

Hollywood’s next hit: vertical movies. This is a fascinating Indiewire story about Hollywood filmmakers who shoot episodic content for vertical video apps.

Microsoft is shutting down its movie store. Xbox owners who bought films through the service can transfer them to Movies Anywhere.

Indiegogo is getting acquired. The crowdfunding platform is being bought by Gamefound, which specializes in crowdfunding board games.

That’s it

It’s been fairly cold in the Bay Area in recent days, so I have embraced all kinds of fall and winter recipes: Portuguese kale soup, Turkish lentil soup, beef stew with roasted beets, and the like. All yummy, but I do hope it’s going to warm up again soon, if only so I can follow this recipe and try to make my own horchata …

Thanks for reading, have a great weekend!
